Teacher Story: Assessment in the Age of AI – Innovations in the course Advocatuur en Beroepsethiek


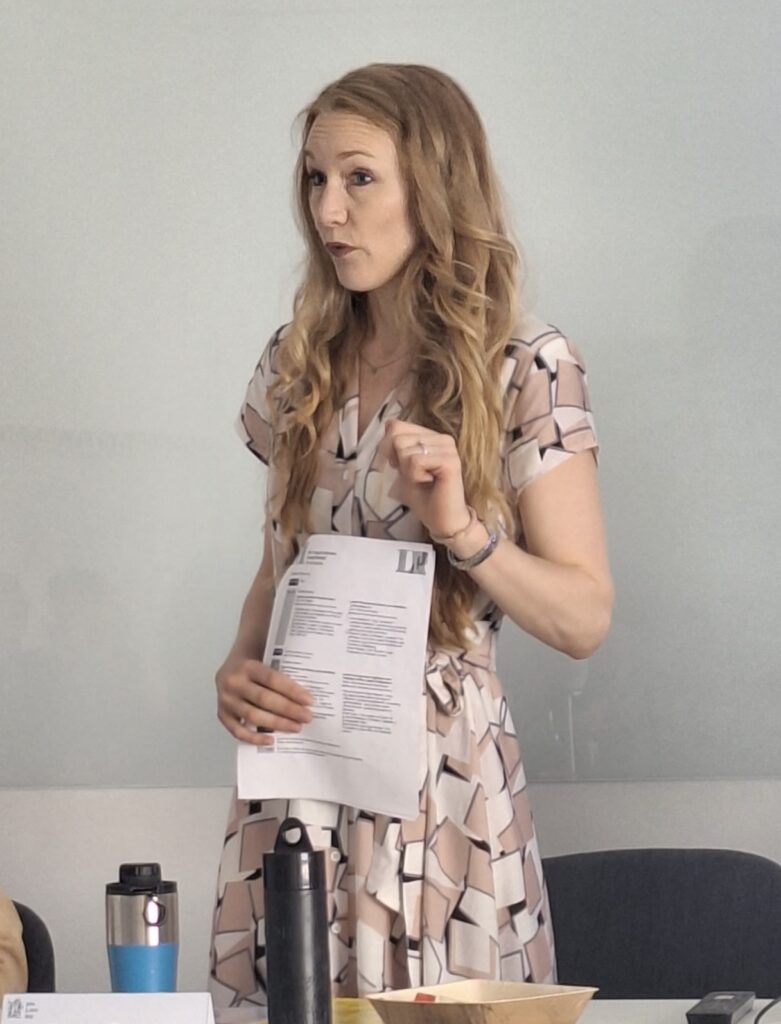

Since the advent of generative AI tools such as ChatGPT, education has faced a new challenge: how can teachers ensure that their assessments are AI-proof? Tamara Butter and Iris van Domselaar faced this challenge in the elective course Advocatuur en Beroepsethiek, part of the master’s programs in Private, Employment, and Public Law. They revamped their assessment approach to make it more AI-resistant. In this article, you’ll learn how they turned this into a success.

AI-Proof Testing

Previously, the course Advocatuur en Beroepsethiek concluded with two essays. With the rise of ChatGPT, Tamara Butter and Iris van Domselaar felt this approach needed to be reconsidered. They explored various ways to assess the learning objectives differently and collaborated with TLC FdR and TLC Central. Ultimately, they decided to conclude the course with two assessments: a duo essay based on the guest speakers’ lectures, and an oral exam.

Course Outline

The course was divided into lectures and seminars. During the lectures, various guest speakers shared their perspectives on the role of lawyers. Based on the first four guest lectures, which covered internal perspectives, students had to formulate and answer a research question in the duo essay. Making the essay assignment this specific limited both the usefulness and the feasibility of using generative AI.

In the following weeks, the guest lectures also covered external perspectives. The course concluded with an individual oral exam, in which students demonstrated their expertise on a self-chosen ethical issue within the profession and answered questions covering the entire course material. This approach supported students’ autonomy in selecting an issue that resonated with them, while also assessing how well they had mastered the overall material.

Where Do You Find the Time?

One of the first questions that comes to mind with an oral exam is: “Where do you find the time?” According to Tamara, oral exams do take time, but both she and Iris found them worthwhile. Not only did the format allow them to assess in an AI-resistant way, it also let them continue the discussions started in the seminars and lectures through the assessment itself. This ensured that the learning objectives, which focus mainly on evaluation and analysis, were assessed appropriately. The oral exams were spread over three weeks, with each session lasting 20 minutes. All in all, it was a considerable time investment; on the other hand, grading an essay or written exam also takes time, and then you miss the personal interaction with the students.

Results

The new course format was enthusiastically received by students. Over 95% of them were very satisfied with the learning experience of the oral exam. One student commented, “Since we had already practiced evaluating normative theories in the duo essay, I was better prepared for the oral exam.” Another added, “It was great that students had so much freedom for personal input in this course.”


Recommendations

According to both Tamara and the students, one vulnerability of the oral exam is that questions can be shared among students, which could benefit those taking the exam later in the period. Although the teachers draw on a set of questions and rotate them, the system is not entirely foolproof. An interesting challenge for next year!

Tamara emphasizes that the oral exam may not be a suitable AI-resistant alternative for every course. For this course, it aligned well with the type of learning objectives, Tamara was willing to invest the necessary time, and the class size allowed for oral exams. By implementing the oral exam, they not only made assessments more AI-resistant, but also better aligned the assessment with the course’s learning objectives: an educational innovation with a win-win result.

Would you like to learn more about GenAI & Assessment or GenAI in general? Read one of the articles below or contact TLC-FdR.