Piloting an Automated Feedback Pipeline with AI

Luke Korthals


For many teachers, especially in large courses, providing detailed, timely feedback on assignments can feel like an impossible task. There simply aren’t enough hours in the week. Sometimes we even shy away from including formative assessments in such large courses, knowing there will be no time to provide the feedback that would make them useful. Well, what if you could build a system to do it for you?

This was the ambitious goal of Luke Korthals, a teacher in the Research Master’s course Programming in Psychological Science. Going far beyond the standard chat interface, Luke used the UvA AI Chat’s Application Programming Interface (API) to create a fully automated feedback pipeline. His innovative project offers a powerful glimpse into the future of educational technology, along with a crucial warning for its use in the present.

Building an Automated Assistant

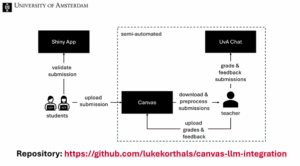

Luke’s project connected the UvA AI Chat directly with Canvas to create a seamless workflow for weekly programming assignments. Here’s how it worked:

- A custom script downloaded all student submissions from Canvas.

- It then prompted UvA AI Chat via the API to analyze each submission.

- The Chat generated a detailed, multi-page PDF feedback report for every student.

- Finally, the script automatically uploaded each unique report back to the student in Canvas.
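The four steps above can be sketched as a single loop. This is a minimal illustration, not Luke’s actual script: `CanvasClient`, `list_submissions`, `upload_feedback`, `build_prompt` and `run_feedback_pipeline` are hypothetical names, and the call to the UvA AI Chat API is represented by a plain function that takes a prompt and returns text, because the real client and its signature are not described here. The report is plain text in this sketch, whereas the pilot produced multi-page PDFs.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass
class Submission:
    user_id: int
    code: str


class CanvasClient(Protocol):
    """Hypothetical wrapper around the Canvas REST API (not a real library)."""

    def list_submissions(self, assignment_id: int) -> list[Submission]: ...

    def upload_feedback(self, assignment_id: int, user_id: int, report: str) -> None: ...


# Hypothetical stand-in for the chat API: a prompt goes in, the model's text comes out.
ChatFn = Callable[[str], str]


def build_prompt(code: str) -> str:
    """Ask the model for specific, error-driven feedback on one submission."""
    return (
        "You are a teaching assistant for a programming course.\n"
        "Give specific, error-driven feedback on the submission below.\n\n"
        f"Submission:\n{code}"
    )


def run_feedback_pipeline(canvas: CanvasClient, chat: ChatFn, assignment_id: int) -> int:
    """Run every submission through the model and upload one report per student.

    Returns the number of reports uploaded.
    """
    uploaded = 0
    for sub in canvas.list_submissions(assignment_id):       # step 1: download
        feedback = chat(build_prompt(sub.code))              # step 2: analyse via the API
        # step 3: assemble the report (plain text here; the pilot rendered a PDF)
        report = f"Feedback for student {sub.user_id}\n\n{feedback}"
        canvas.upload_feedback(assignment_id, sub.user_id, report)  # step 4: upload
        uploaded += 1
    return uploaded
```

Passing the Canvas client and the chat function in as parameters keeps the loop itself course-independent, which is one way to get the kind of reuse described below: only the client configuration and the prompt change between courses.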

While the initial setup took about a month, Luke notes the system is highly reusable and could be adapted for a new course in about a day.

The Power of Feedback at Scale

The primary goal of the pilot was to provide feedback in settings where, realistically, teachers have no time to give it. “The fact is simply that human teachers don’t have time to give any feedback, so it could be a valuable addition to the course,” Luke explains.

The feedback itself was designed to be robust. Inspired by educational research, the prompts guided the AI to provide specific, error-driven advice. Luke even programmed innovative features: an AI-generated coding challenge in each report for extra practice, and personalized suggested questions the student could ask in class about a difficult topic, empowering them to seek further help.
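One way to get a report with these distinct parts is to ask the model for fixed headings and then split its reply. The sketch below assumes the model follows the requested headings; the section names, `build_feedback_prompt` and `split_sections` are illustrative inventions, not the prompts used in the pilot.

```python
import re

# Hypothetical section names; the pilot's actual report structure may differ.
SECTIONS = ("Feedback", "Coding challenge", "Questions to ask in class")


def build_feedback_prompt(assignment: str, code: str) -> str:
    """Request error-driven feedback, one extra exercise, and questions for class."""
    headings = "\n".join(f"## {name}" for name in SECTIONS)
    return (
        "You are giving formative feedback on a programming assignment.\n"
        "1. Under 'Feedback', point to specific errors in the code and explain how to fix each one.\n"
        "2. Under 'Coding challenge', write one short extra exercise targeting the student's weakest area.\n"
        "3. Under 'Questions to ask in class', suggest two or three questions the student "
        "could ask the teacher about the topic they found hardest.\n"
        f"Use exactly these Markdown headings, in order:\n{headings}\n\n"
        f"Assignment:\n{assignment}\n\nSubmission:\n{code}"
    )


def split_sections(reply: str) -> dict[str, str]:
    """Split the model's reply on '## <name>' headings; missing sections map to ''."""
    parts = {name: "" for name in SECTIONS}
    # A capturing group makes re.split keep each heading name in the result list,
    # so the list alternates: [text before first heading, name, body, name, body, ...]
    chunks = re.split(r"^## (.+?)\s*$", reply, flags=re.MULTILINE)
    for name, body in zip(chunks[1::2], chunks[2::2]):
        if name in parts:
            parts[name] = body.strip()
    return parts
```

Returning an empty string for a missing section, rather than raising an error, lets the pipeline still deliver the parts the model did produce and flag incomplete reports for a quick manual look.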

A Crucial Warning: The Pitfalls of AI Grading

As part of the research project Luke was conducting, the AI was also tasked with assigning a preliminary grade. This is where the project uncovered a critical insight: Luke strongly advises against using AI for summative grading, a practice that is also not allowed under current UvA AI policy.

His research revealed a “systematic bias for lower grades.” When the AI’s grades were compared with those of four different human graders, the AI consistently awarded lower scores than the humans did. Of course, the fact that the system underscored in this particular project does not mean it always will: other studies have found LLMs overscoring, or grading quite accurately. “Based on the evidence that we have right now, fully automated large language model grading is not accurate enough to be implemented blindly for all assignments and all courses,” Luke cautions. “I highly advise teachers against cutting corners and letting LLMs grade their students.”
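A systematic bias of this kind shows up as a consistently signed gap between the AI’s grade and the human grades for the same submissions. The sketch below is a generic way to summarize that gap, not the analysis used in Luke’s study; `grading_bias` is a hypothetical helper, and the grading scale is whatever the course uses.

```python
from statistics import mean


def grading_bias(ai_grades: list[float], human_grades: list[list[float]]) -> dict[str, float]:
    """Compare AI grades with the average of several human graders.

    human_grades[i] holds every human grade for submission i.
    A negative 'mean_difference' means the AI scores lower than the humans on average.
    """
    if len(ai_grades) != len(human_grades):
        raise ValueError("need one list of human grades per AI grade")
    # Signed gap per submission: AI grade minus the mean human grade.
    diffs = [ai - mean(humans) for ai, humans in zip(ai_grades, human_grades)]
    return {
        "mean_difference": mean(diffs),                       # direction of the bias
        "mean_absolute_difference": mean(abs(d) for d in diffs),  # size of disagreement
        "share_scored_lower": sum(d < 0 for d in diffs) / len(diffs),
    }
```

Reporting the signed mean next to the absolute mean separates two different problems: an AI that is off in random directions, and one that leans the same way on most submissions. A real evaluation would also test whether the gap is larger than the disagreement among the human graders themselves.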

The Takeaway: A Tool for Support, Not Judgment

Luke’s pilot draws a clear distinction: at least at this stage of its development, the UvA AI Chat is a potentially revolutionary tool for feedback, but a flawed and biased tool for grading.

His advice for colleagues is to explore using the UvA AI Chat to give students the support they otherwise wouldn’t get, especially in large, skill-based courses. For those with the technical skill, creating an automated pipeline offers a powerful path forward. But for now, the final judgment on student work must remain firmly in human hands.

Looking ahead, Luke is optimistic about “human in the loop” solutions, where AI assists with grading but teachers audit the results. This could free up countless hours, allowing educators to invest in what truly matters: helping students acquire the knowledge (through activities such as one-on-one tutoring and direct student support) rather than assessing whether they got there.
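The “human in the loop” idea can be made concrete as a rule in the data model: an AI-proposed grade is never released until a teacher has reviewed it. This is a minimal sketch of that idea only; `GradeProposal` and `releasable_grades` are hypothetical names, not part of any existing system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GradeProposal:
    student_id: int
    ai_grade: float
    teacher_grade: Optional[float] = None  # stays None until a teacher has audited it

    def approve(self, teacher_grade: Optional[float] = None) -> None:
        """Teacher signs off, either keeping the AI's proposal or overriding it."""
        self.teacher_grade = self.ai_grade if teacher_grade is None else teacher_grade


def releasable_grades(proposals: list[GradeProposal]) -> dict[int, float]:
    """Return only the grades a human has audited; unreviewed proposals are withheld."""
    return {p.student_id: p.teacher_grade for p in proposals if p.teacher_grade is not None}
```

Because release depends on `teacher_grade` rather than `ai_grade`, the final judgment stays with the teacher even when they simply confirm the AI’s suggestion.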